In this blog post we give a high-level introduction to Sharemind's new protocol development pipeline and overview the benefits that come with it.

From programs to protocols

Let's start by looking at a simplified version of SecreC code we previously saw in our auction demo blog post. The following code snippet defines a procedure that compares bids of Alice and Bob and publishes who has the higher bid and what the value of the bid is. All of this is done without revealing the value of the losing bid.

import shared3p;

import oblivious;

domain pd_shared3p shared3p;

void auctionDemo(pd_shared3p int aliceBid, pd_shared3p int bobBid) {

pd_shared3p bool aliceWon = aliceBid > bobBid;

pd_shared3p int winningBid = choose(aliceWon, aliceBid, bobBid);

publish("aliceWon", aliceWon);

publish("winningBid", winningBid);

}

At the start of the program, we have two module imports: the first module defines the shared3p protection domain kind, and the second module oblivious defines the choose function. Next, we declare security domain pd_shared3p of kind shared3p. Protection kind (in this case shared3p for additive 3-party secret sharing) can be thought of as a way of keeping secrets. Protection domain is one instance of that kind and is always associated with some concrete (physical) resources.

The auctionDemo procedure takes two private inputs aliceBid and bobBid, securely compares them and stores the resulting secret Boolean in aliceWon variable, then chooses either aliceBid or bobBid as winningBid depending on whether aliceWon is (respectively) true or false. Finally, results are published.

To execute this code Sharemind has to be able to compare the bids of Alice and Bob without anyone learning information about the bids themselves or the result of the comparison. To achieve privacy Sharemind can't rely on just local computations but needs to execute a cryptographic protocol that takes multiple rounds of communication. More than one protocol is needed to evaluate this code. The choose function internally uses multiplication, addition and subtraction and more protocols are needed even for just initializing private values.

Challenges in implementing protocols

Sharemind lends its efficiency in part to a large set of primitive, highly optimized protocols that are made available to applications. The size of this set has brought with it an issue not present in frameworks with a small number of supported operations: the set of protocols must be maintained, as new protocols are still added to it and possible optimizations for a particular sub-protocol should be propagated into larger protocols.

Our protocol set used to be implemented in a simple C++ framework. It was designed to allow a single protocol to be executed on many inputs at a time (SIMD style) but parallel execution of two different protocols was only realizable by interleaving both protocols and manually packing all network messages together. Doing so was very time-consuming, even for medium-sized protocols. This approach lead to unreadable protocols, and difficulties with maintenance and introducing modifications. Fixing a bug meant making changes in every place the modified protocol was copied. In the end, well optimized protocols ended up being unreadable, unmaintainable and slow to implement. Thus, most protocols were not optimized and were thus slow.

A typical protocol is implemented as a composition of smaller ones and rarely are new fundamental protocols created. For example, floating-point operations are usually implemented in terms of fixed-point operations, which in turn, employ integer and bit-manipulation operations.

Usually some parts of a protocol can be executed in parallel. When implementing the protocol, it's essential that these parts actually are executed in parallel to minimize the number of communication rounds. Not doing so will drastically reduce performance because of network latencies involved.

One possible way to achieve this parallelism automatically is to have access to the data-flow structure of the protocol. This allows for network messages that do not depend on each other to be sent during the same communication round. We achieved this via implementing a domain-specific language. Using this language high-level protocol descriptions are compiled to low-level form where data-flow is explicit.

Protocol development pipeline

Protocol language is high-level language for constructing low-level protocols. To better understand the development process of protocols, let's look at implementing a protocol for adding two integers. In this protocol no network communication is involved. The code looks as follows:

parties 3

// This is polymorphic function that adds two n-bit integers

def add(u: uint[n], v: uint[n]): uint[n] = u + v

/* Code is generated for 32-bit and 64-bit (unsigned) integers: */

protocol add32(x: uint[32], y: uint[32]): uint[32] = add(x, y)

protocol add64(x: uint[64], y: uint[64]): uint[64] = add(x, y)

At the start of the file, we need to indicate the number of parties our protocol expects. In this example, we have 3 computing parties. The default mode of operation in the protocol DSL is that every computing party executes the same code. Meaning in this example, all three parties perform exactly the same way and no network communication is involved. After declaring the number of parties, we define a function named add that takes two n-bit unsigned integers u and v, and returns an n-bit integer. The function is defined to evaluate to the sum of the arguments. As the last step we declare that intermediate code is to be generated for two protocols: 32-bit and 64-bit addition.

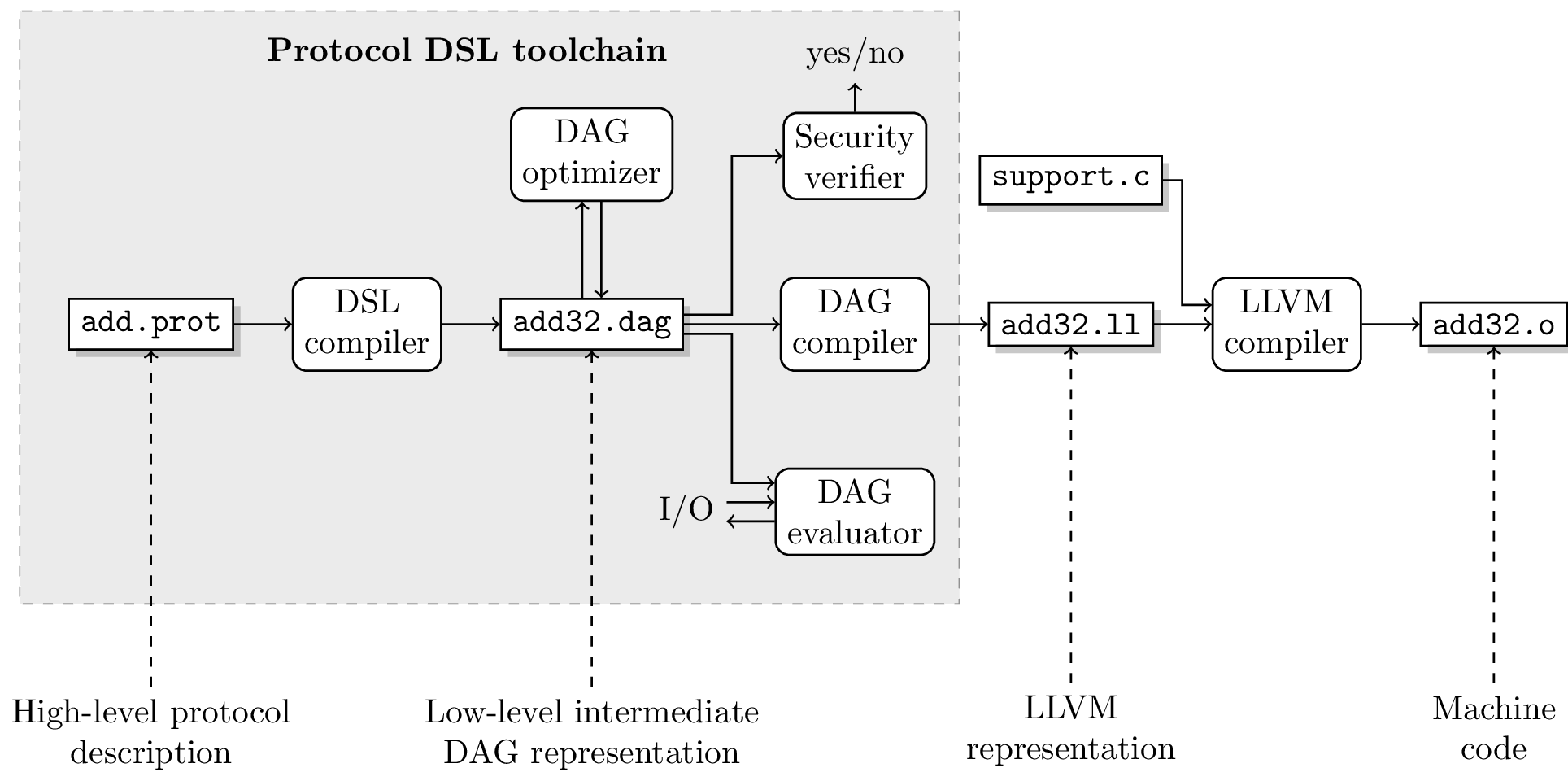

The figure above gives an overview of how the protocol DSL toolchain can be used to compile, evaluate or analyze the current example. Compiling the file will produce an intermediate code file for each protocol declarations. Two files add32.dag and add64.dag are produced. Each of the generated DAG (directed acyclic graph) files can be optimized, evaluated for testing, analyzed for security, and can be compiled to machine code via the LLVM toolchain. The object code can be linked to Sharemind to provide a protocol that works on vectors of inputs (SIMD style).

The intermediate DAG has a textual representation, but it's very difficult to glean any information from that directly because even moderately small protocols compile to rather large amount of code. The addition example at hand is sufficiently small but also very uninteresting because all 3 parties work independently. The 32-bit addition compiles to the following DAG:

v0 = input 1 u32,1

v1 = input 1 u32,2

v2 = input 1 u32,3

v3 = input 2 u32,1

v4 = input 2 u32,2

v5 = input 2 u32,3

v6 = add u32,1 v0 v3

v7 = add u32,2 v1 v4

v8 = add u32,3 v2 v5

output 1 v6

output 1 v7

output 1 v8

Each operation of the DAG is annotated with a type of its result. Type is composed of the underlying data type and the party number indicating which party owns that value. For example, the input 1 u32,1 operation results in a 32-bit value v0 for the first party. The first number in input 1 u32,1 denotes that it returns first input of that party. Similarly, output 1 v6 indicates that v6 is the first output of party that holds v6.

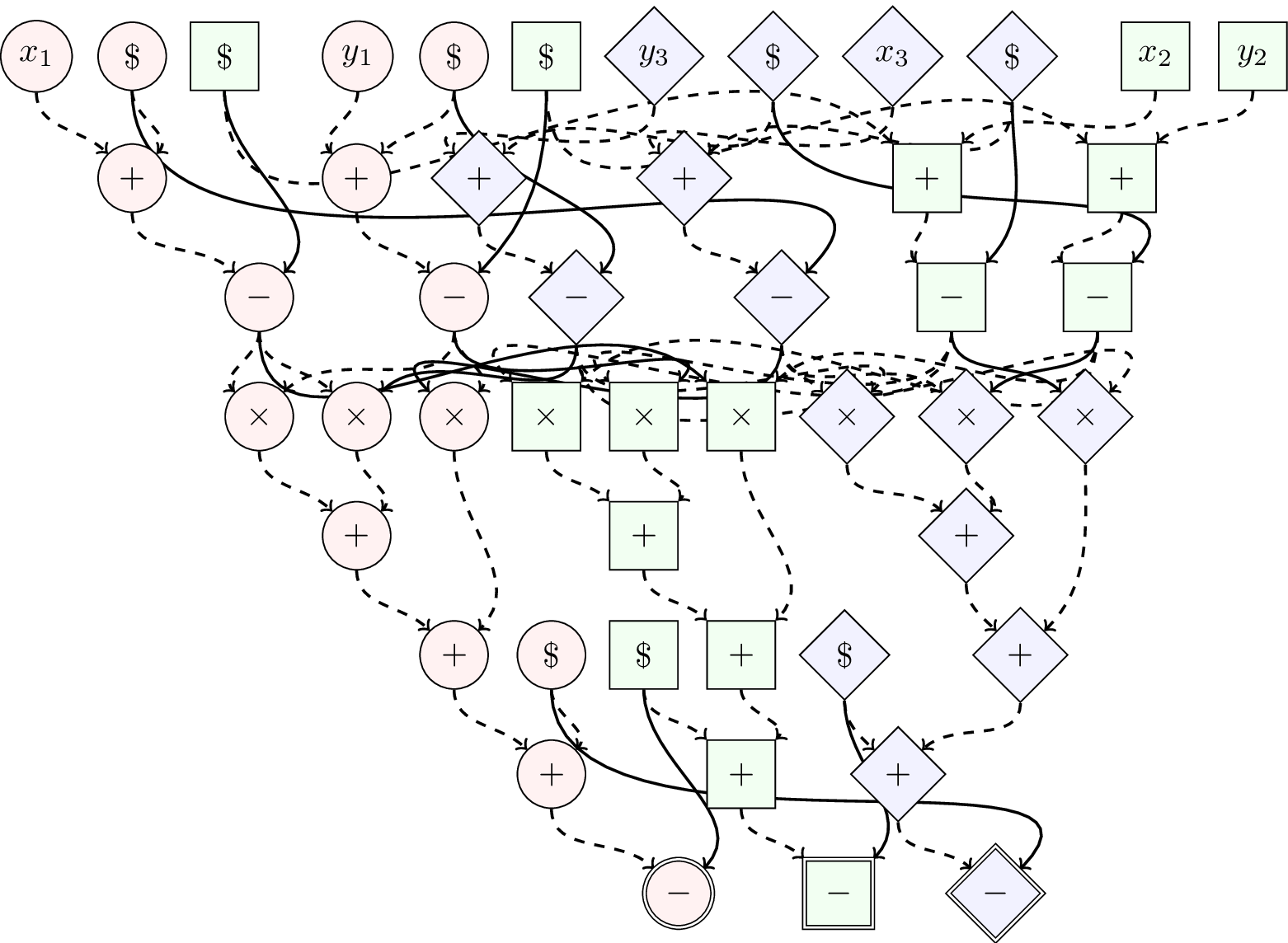

It's more interesting to visualize the intermediate example as a graph. In the example below, we have the Sharemind multiplication protocol in its intermediate form. Each node of the graph indicates computation and edges indicate flow of data. Different colored (shaped) nodes indicate different computing parties. Solid edges indicate network communication from one party to another. Nodes with dollar signs denote random number generation, nodes x and y are inputs and nodes with double edges are outputs.

Looking at all paths that lead to any output we can see that they contain at most 2 solid edges. This tells us that this protocol takes 2 communication rounds. However, we perform an optimization that eliminates the need to send random values over the network reducing this protocol to fit into a single communication round. Instead of one party sending a random value to another party both participants simply generate common random value using previously agreed upon seed.

To compile a protocol specified in our DSL to an arithmetic circuit, all function calls are inlined and the code is converted to monomorphic form. If the control flow of a protocol requires some information about data known only at runtime, such as public inputs or lengths of dynamic arrays, then this protocol cannot be fully specified in the protocol DSL. In those cases, the protocol can still be written in C++ or SecreC, either fully or with some parts implemented in the DSL.

We have implemented our own custom optimizer (in addition to what LLVM does), because LLVM is not able to optimize across network boundaries and lacks specific domain knowledge. For example, in some cases we can automatically detect if random numbers are not needed for security and can thus can be replaced by 0 values (enabling further optimizations). This situation can occur when some arguments of a protocol are constants.

Bit-shift right protocol

A good example that demonstrates many of the features of the protocol language is the bit-shift right operation. It demonstrates how even a relatively small protocol can use more than one internal representation and use multiple other protocols and helper functions. We do not cover all the details of the example below, it's mostly intended to demonstrate features of the language:

def shiftr[8 <= n](u: uint[n], s: uint[8]): uint[n] = {

let

u = bitextr(u)

vs = map(\i -> u >> i, countUp(0))

bs : uint[n] = chVector`[l = 0](s)

rs = zipWith(\v b -> conj(v, lift(b)), vs, bs);

xorToAdditive(xor(rs))

}

The protocol takes an integer u and the value to shift by s and it returns the shifted value. All inputs and outputs are additively shared. The shift amount is strictly required to be 8 bits long and the input must be at least that many bits long. Notice that this protocol is polymorphic in the sense that it can work on n-bit numbers regardless how large the n is. This protocol is directly exposed from SecreC for 8-, 16-, 32- and 64-bit integers but some other protocols may use it on larger inputs.

The implementation is quite compact. We first convert the input to XOR shared form by bitextr and compute all possible n shifts (lines 3 and 4). Next, we build an n-element bit vector where the i-th bit indicates whether the shift is by i bits (line 5). Finally, we mask away every shifted value if the respective bit is 0 and XOR the results (line 6). This yields a XOR shared result that we convert back to additive form (line 7).

In the implementation we have used 4 protocols: bitextr, chVector, conj, and xorToAdditive. Additionally, we have used many useful (some higher order) functions: map, countUp, lift, zipWith, and xor. All of those are re-useable components implemented in the protocol DSL language itself.

There are few opportunities to exploit parallelism in this protocol. Values of variables u and bs can be computed at the same time, and all conjunctions needed to compute rs can also be performed at the same time.

Benefits of protocol language

Because protocols are compiled to a machine-readable form it's easy to implement new tools that either transform protocols or tell us some information about them. In fact, much of the benefits that the protocol language brings stem from the improved tooling.

Ease of development: Adding new protocols or changing existing ones used to be a daunting task in Sharemind. Protocol DSL has made this much easier in multitude of ways.

Because protocols now compose well the programmer does not have to manually parallelize protocols and can rely on everything that is not data-dependent to automatically be executed in parallel.

It is now very easy to create protocols that work on larger than 64-bit values. This is often useful for many floating-point protocols (also integer division). It's easier to get started with protocol development. There is no need to set up Sharemind installation and testing and debugging of protocols can happen on a single machine.

The amount of code dedicated to protocols is now significantly smaller. For example, integer division protocol spans only 45 lines of code whereas in our C++ framework it used to take 1500 lines of code (while only supporting 32-bit integers). Less code helps with refactoring, debugging and testing.

Improved security: We have implemented a tool that checks if a given protocol in its IR form is secure. All protocols implemented with DSL are proven to be secure and roughly 90% of all non-trivial protocols are implemented using protocol DSL and have thus been verified to be secure.

Improved performance: The performance of most of Sharemind's secure operations has drastically improved. This is both thanks to automatic optimizations that DSL facilitates and also because it's easier to integrate new optimizations to underlying protocols.

All floating-point protocols are now over 4 times faster (on large inputs) and many are over 10 times faster. Large number of integer operations are now three times faster than respective protocols that were purely implemented in C++. Much of the performance advantage of new integer operations come from automatic optimizations. Also, a significant portion of improvement to floating-point protocols can be attributed to new techniques we have developed. While floating-point protocols have improved in performance, they are also now significantly more accurate.

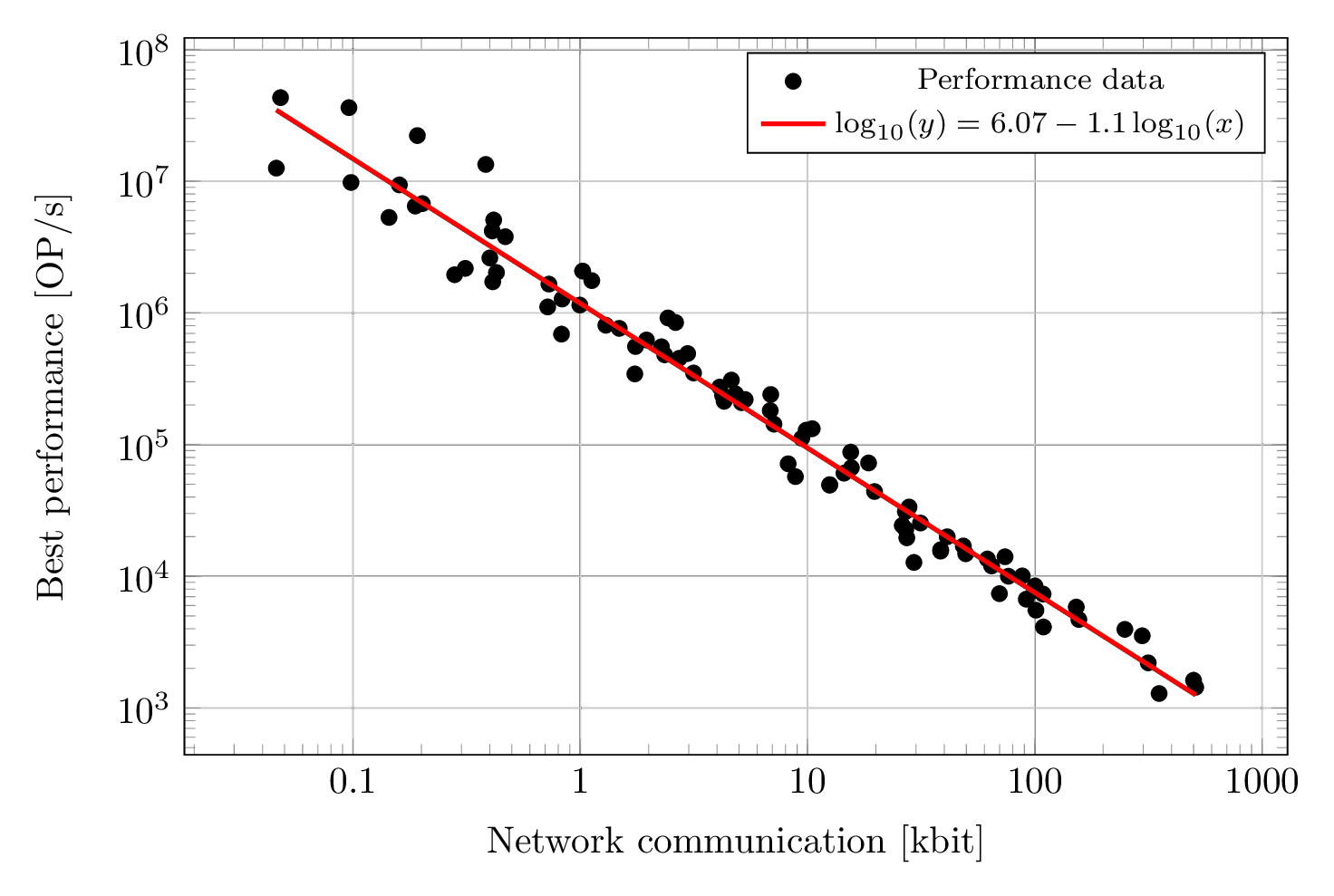

Other nice things: Even without running the protocol, we can tell how many bits a protocol sends over a network or how many communication rounds it takes. This allows us to predict performance of protocols very precisely. For example, the following graph relates protocols' best performance (maximum number of vectorized operations per second it can achieve) to its communication cost (in kilobits). We can see that performance can be very well estimated from communication cost.

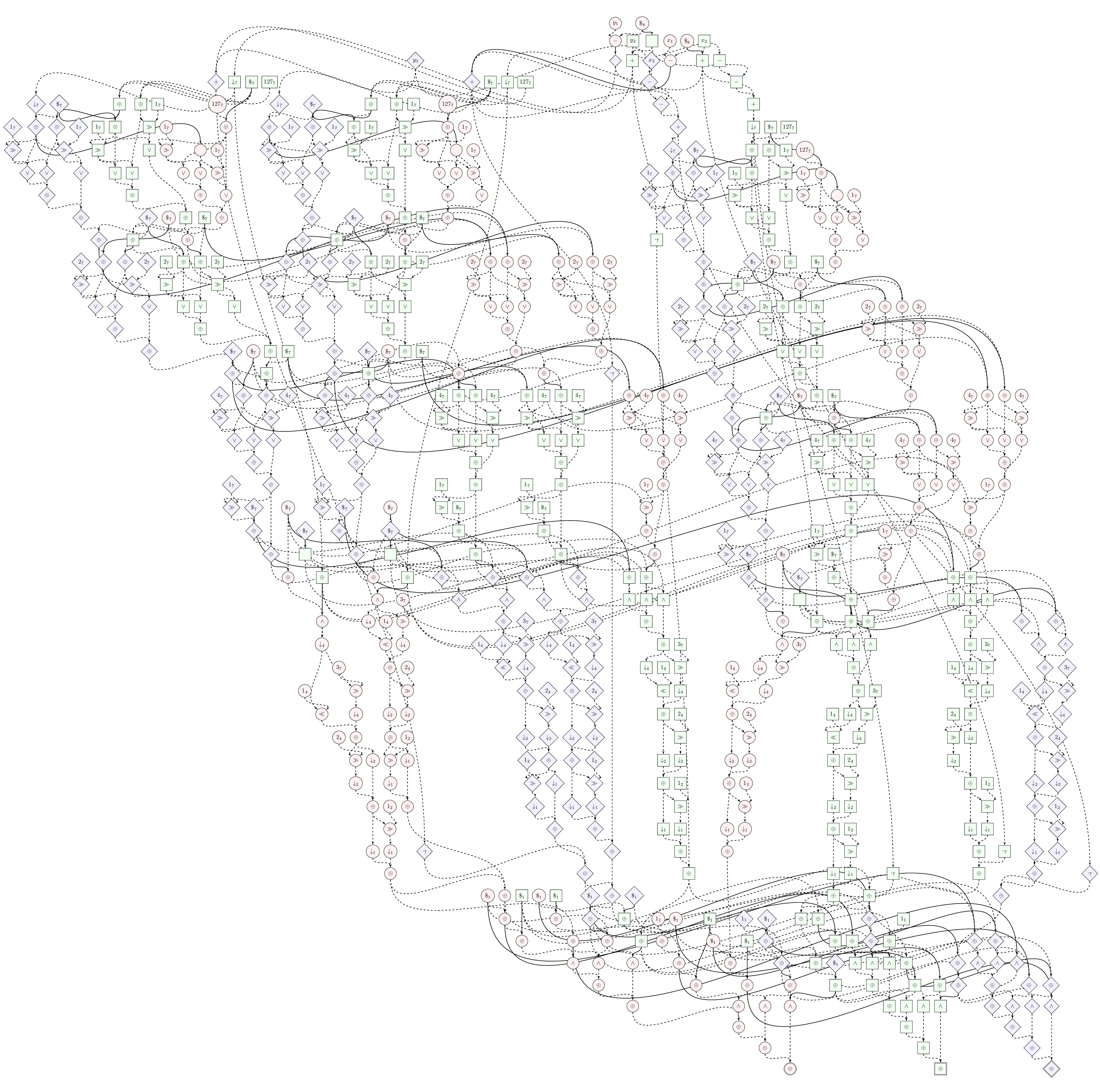

We can also draw pretty pictures! Below is a visualization of the intermediate form of our 8-bit comparison protocol. This is one of our smallest protocols.

Technology

Protocol DSL and the surrounding toolchain uses a lot of cool technology. The compiler and security analyzer are implemented in Haskell, the DAG optimizer in Prolog. We use Z3 theorem prover for type checking. LLVM and Clang are used for compiling DAGs to binary.