We have made it our mission to improve how computers and software systems work with data. Systems that process personal data have to change fundamentally, and this is one of the things that Data Protection by Design teaches us.

Jason Cronk, also known as @privacymaverick, is a harbinger of this change. With an intimate understanding of both privacy regulations and how today’s enterprise handles data, he is well positioned to tell us more about the role of technology in achieving privacy.

Article 25 of the much-ballyhooed General Data Protection Regulation (GDPR) concerns making data protection a forethought, not an afterthought. Though the article doesn’t reference them, Data Protection by Design and Default is clearly based on Ann Cavoukian’s 7 foundational principles of privacy by design, which she created during her seventeen-year tenure as the Information and Privacy Commissioner of Ontario, Canada. Her conceptualization of privacy by design was later unanimously adopted in a resolution of the 2010 Conference of Data Protection and Privacy Commissioners. She had clearly been influenced by her early exposure to privacy enhancing technologies (PETs). Two principles illustrate this exposure:

- Principle 3: Privacy Embedded into the Design

- Principle 4: Full functionality; Positive Sum Not Zero Sum

Principle 3 mirrors the intertwined nature of PETs. For example, secure multi-party computation is not something you can “bolt on,” in Ann’s vernacular, after the fact. The privacy enhancing nature of Tor, a mix network, is fundamental to its design. Unlike TLS (Transport Layer Security, the technology that keeps our internet traffic safe), the fundamental privacy and security aspects of PETs cannot be turned off or downgraded.

Similarly, Ann saw the potential for PETs to eliminate the trade-off game that so often plagues debates on privacy and data protection. Principle 4 shows her awareness of PETs, which, by enhancing privacy, allow one to achieve desired results while maintaining the confidentiality of the underlying data. Naively, most developers think you must have access to data to perform calculations on that data; therefore, excluding it from collection or denying access to it necessarily denies the desired functionality. PETs like homomorphic encryption, which permits computation directly on encrypted data, allow just that sort of magic that most developers can’t fathom because they haven’t been exposed to it.
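To make that "magic" a little more concrete, here is a minimal sketch of additively homomorphic encryption. It uses a textbook Paillier scheme, which is my choice for illustration and not something the principles or the GDPR prescribe. The tiny hard-coded primes and the function names (`keygen`, `encrypt`, `decrypt`, `add_encrypted`) are assumptions made for readability; real deployments use vetted libraries and keys thousands of bits long.

```python
import math
import secrets


def keygen(p=1789, q=1931):
    """Build a toy Paillier key pair from two small primes (insecure sizes)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    g = n + 1
    # mu is the modular inverse of L(g^lam mod n^2), where L(x) = (x - 1) // n
    mu = pow((pow(g, lam, n * n) - 1) // n, -1, n)
    return (n, g), (lam, mu)


def encrypt(pub, m):
    """Encrypt an integer m (0 <= m < n) under the public key."""
    n, g = pub
    while True:
        r = secrets.randbelow(n - 1) + 1  # random blinding factor in [1, n-1]
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n * n) * pow(r, n, n * n)) % (n * n)


def decrypt(pub, priv, c):
    """Recover the plaintext with the private key."""
    n, _ = pub
    lam, mu = priv
    return ((pow(c, lam, n * n) - 1) // n * mu) % n


def add_encrypted(pub, c1, c2):
    """Multiplying ciphertexts adds the hidden plaintexts (modulo n)."""
    n, _ = pub
    return (c1 * c2) % (n * n)


pub, priv = keygen()
c1 = encrypt(pub, 52000)  # e.g. one person's salary
c2 = encrypt(pub, 61000)  # another person's salary
c_sum = add_encrypted(pub, c1, c2)  # computed without seeing either value
total = decrypt(pub, priv, c_sum)
```

The party calling `add_encrypted` needs only the public key and the two ciphertexts, so it produces a useful result without ever having access to the underlying values.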

Article 25 of the GDPR alludes to the use of PETs, though it doesn’t go so far as an outright call for them. Language such as paragraph 1’s “Taking into account the state of the art” calls upon those processing personal data to be aware of the cutting-edge technology found in most privacy enhancing technologies. Further, the drafters’ call for organizations “to integrate the necessary safeguards into the processing” of personal data echoes the embedded nature of PETs (as well as Privacy by Design’s 3rd Principle).

In my forthcoming book, I use a four-point methodology for achieving privacy by design. This is not meant to suggest that it is the only methodology; it is just the process I use. It is based in part on the work of Professor Jaap-Henk Hoepman and his Privacy Strategies and Tactics. (Strategies are identified in the definitions below in bold and small capitals.)

architect - **Minimize** personal information and **Separate** data into domains.

secure - **Hide** information from external and internal actors without a need to know. **Abstract** data to avoid unnecessary detail.

supervise - **Enforce** policies and processes around appropriate use of data. **Demonstrate** compliance with those policies.

balance - **Inform** individuals and give them **Control** to provide balance between them and the organization.

While many of the strategies are fairly straightforward (destroying data or encrypting data, for instance), PETs usually cross the architect and secure boundaries. Mix networks, like Tor, use encryption to tunnel data through intermediary nodes. This minimizes the data available to each intermediary (only the immediate source and destination), separates data by node (each node knows where a packet came from and where it goes next, but not the full path), and hides the underlying communications using encryption. Similarly, secure multi-party computations minimize the personal information shared and separate it by domain (the parties in the multi-party protocol).
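The layering idea can be sketched in a few lines. This is a toy model under loud assumptions, not Tor's actual protocol: the relay names, the pre-shared keys, and the `toy_cipher`, `build_onion`, and `peel` helpers are all hypothetical, and the XOR keystream cipher is for illustration only and offers no real security.

```python
import hashlib
import json


def toy_cipher(key, data):
    """XOR data with a SHA-256 counter keystream. Illustration only, NOT secure."""
    out = bytearray()
    for offset in range(0, len(data), 32):
        block = hashlib.sha256(key + offset.to_bytes(8, "big")).digest()
        out.extend(b ^ k for b, k in zip(data[offset:offset + 32], block))
    return bytes(out)


def build_onion(message, destination, route):
    """Wrap the message in one encrypted layer per relay, innermost first.

    route is a list of (relay_name, relay_key) pairs in forwarding order.
    """
    next_hop, payload = destination, message
    for name, key in reversed(route):
        layer = json.dumps({"next": next_hop, "data": payload.hex()}).encode()
        payload = toy_cipher(key, layer)
        next_hop = name
    return payload  # handed to the first relay in the route


def peel(key, blob):
    """A relay removes its own layer and learns only where to forward next."""
    layer = json.loads(toy_cipher(key, blob))
    return layer["next"], bytes.fromhex(layer["data"])


route = [("relay-1", b"key-1"), ("relay-2", b"key-2"), ("relay-3", b"key-3")]
blob = build_onion(b"hello", "example.org", route)

hops = []  # what each relay learns: (itself, next hop)
for name, key in route:
    next_hop, blob = peel(key, blob)
    hops.append((name, next_hop))
```

Each entry in `hops` is the full extent of one relay's routing knowledge: the first relay never sees the final destination, and only the last relay sees where the message ultimately goes.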

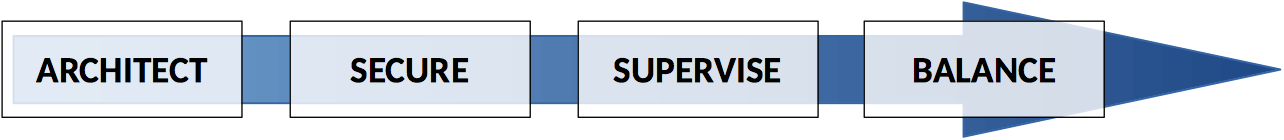

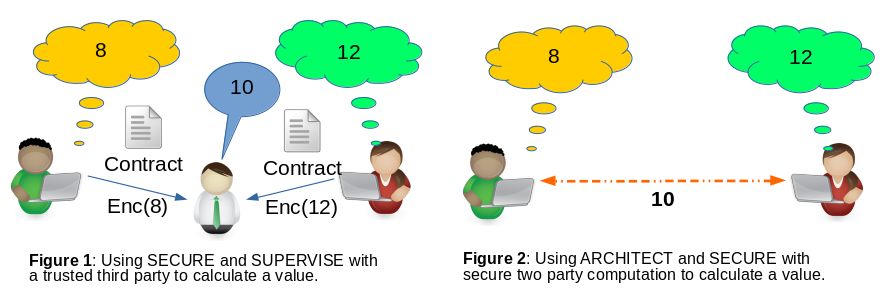

This is what makes PETs difficult for the average developer to grasp. Consider a scenario where two individuals want to calculate the average of their inputs. The seemingly reasonable assumption most people make is that the two parties must share their values, if not with each other, then with a trusted third party. They might secure the data in transit with encryption or supervise the trusted third party with a contract, but they don’t realize that by using a secure two-party computation they can architect and secure the system without relying on a trusted third party.
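One way to picture the averaging scenario is additive secret sharing, sketched below. This is a single-process simulation with hypothetical names (`MODULUS`, `split_into_shares`, `secure_average`), not a networked protocol, and it assumes every party follows the rules honestly. The example uses three parties because with exactly two, each party can work out the other's input from the average and its own value; that is a property of the function being computed, not a flaw in the protocol.

```python
import secrets

# Assumed public modulus, chosen to be far larger than any possible sum.
MODULUS = 2**61 - 1


def split_into_shares(value, n_parties):
    """Split value into n random-looking shares that sum to it modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def secure_average(inputs):
    """Simulate the protocol: no raw input is ever sent to another party."""
    n = len(inputs)
    # distributed[i][j] is the share that party i sends to party j.
    distributed = [split_into_shares(v, n) for v in inputs]
    # Each party j adds the shares it received and publishes only that sum.
    partial_sums = [
        sum(distributed[i][j] for i in range(n)) % MODULUS for j in range(n)
    ]
    # The published partial sums combine into the true total.
    total = sum(partial_sums) % MODULUS
    return total / n


average = secure_average([30, 50, 70])
```

Any single share or partial sum is uniformly random on its own, so no party and no outside observer learns an individual input, yet the final average is exact and no trusted third party is involved.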

Utilizing controls on the left side of the methodology (architect and secure), as opposed to the middle two (secure and supervise), reduces privacy risks because it reduces a threat actor’s opportunities by minimizing the data available to them. PETs are a powerful but underutilized tool in the privacy engineer’s arsenal, one that allows for the reduction of privacy risk without forgoing functionality.