In this two-part blog post, we answer an often-asked question: is Sharemind anonymisation? Or is it something better? Is the comparison even valid? Triin and Dan combine their legal and technical know-how to tell you more.

Part 1: What is anonymisation and how can you use it?

De-identification is one of the most widely used approaches for protecting the privacy of individuals. It encompasses several ways to disconnect a person’s identity from a set of information, including pseudonymisation, encryption and anonymisation. Why is de-identification useful in the context of privacy regulations?

The EU’s General Data Protection Regulation (GDPR), which has applied since May 25, 2018, is geared to encourage even more interest in de-identification technologies. GDPR Article 11 provides flexibility for processing personal data in de-identified form. According to GDPR Recital 26, if the process of de-identification results in anonymous information, then the principles of data protection should not apply and the GDPR does not come into play with regard to further processing of such anonymous information. The GDPR also promotes pseudonymisation and encryption throughout its text.

Therefore, while processing de-identified information can help better maintain and protect the privacy of individuals, it can also help save resources because the legal requirements for processing de-identified information under the GDPR are more relaxed compared to the standard legal requirements for processing information in identifiable form.

Anonymisation from the technical perspective

According to the common understanding, anonymisation is about reducing the information in personal data or adding noise to it. This can be done either by removing or coarsening certain values in a set of identifiable data (generalisation) or by replacing them with random data (randomisation). Noise addition may occur at the input level (anonymised database) or at the output level (anonymised query result).
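As a minimal sketch of the two approaches, the following Python snippet generalises a record by coarsening its age and postcode, and randomises it by perturbing a numeric value. The record layout, the field names and the `spread` parameter are assumptions made up for this illustration, not part of any specific anonymisation product.

```python
import random

def generalise(record):
    """Coarsen quasi-identifiers: exact age -> 10-year band, postcode -> 2-character prefix."""
    low = (record["age"] // 10) * 10
    return {
        "age_band": f"{low}-{low + 9}",
        "postcode_prefix": record["postcode"][:2],
        "salary": record["salary"],
    }

def randomise(record, rng, spread=500):
    """Perturb the salary with uniform integer noise drawn from [-spread, spread]."""
    noisy = dict(record)
    noisy["salary"] = record["salary"] + rng.randint(-spread, spread)
    return noisy

rng = random.Random(0)  # fixed seed only to make the illustration repeatable
person = {"age": 37, "postcode": "51009", "salary": 2400}  # hypothetical record

generalised = generalise(person)
randomised = randomise(generalised, rng)
print(generalised)
print(randomised)
```

Note that both functions read the original values in the clear, which is why the party running them needs access to the identifiable data.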

The party performing anonymisation on the data needs full access to it themselves.

In the case of anonymised inputs, the typical methods used are k-anonymity, l-diversity, t-closeness, rule-based anonymisation, aggregation and private disclosure. For anonymised outputs, differential privacy is the most common technique. However, all these technologies today require that anonymisation is performed with unrestricted access to the database. Also, some anonymisation methods are more complex, making it harder to apply them automatically (e.g., by the data owner). Research into automating them is ongoing.
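To make the first of these methods concrete, here is a small sketch of how one might measure the k in k-anonymity for a table: group the rows by their quasi-identifiers and take the size of the smallest group. The column names and the sample rows are hypothetical. The check also illustrates the point above, since it has to read every row of the database.

```python
from collections import Counter

def k_anonymity(rows, quasi_identifiers):
    """Return the size of the smallest group of rows sharing the same quasi-identifier values."""
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return min(groups.values()) if groups else 0

# Hypothetical, already generalised table.
rows = [
    {"age_band": "30-39", "postcode_prefix": "51", "diagnosis": "A"},
    {"age_band": "30-39", "postcode_prefix": "51", "diagnosis": "B"},
    {"age_band": "40-49", "postcode_prefix": "10", "diagnosis": "A"},
]

k = k_anonymity(rows, ["age_band", "postcode_prefix"])
print(k)  # the third row is alone in its group, so the table is only 1-anonymous
```

A table is usually considered k-anonymous only if this value reaches a chosen threshold; rows in smaller groups would need further generalisation or suppression.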

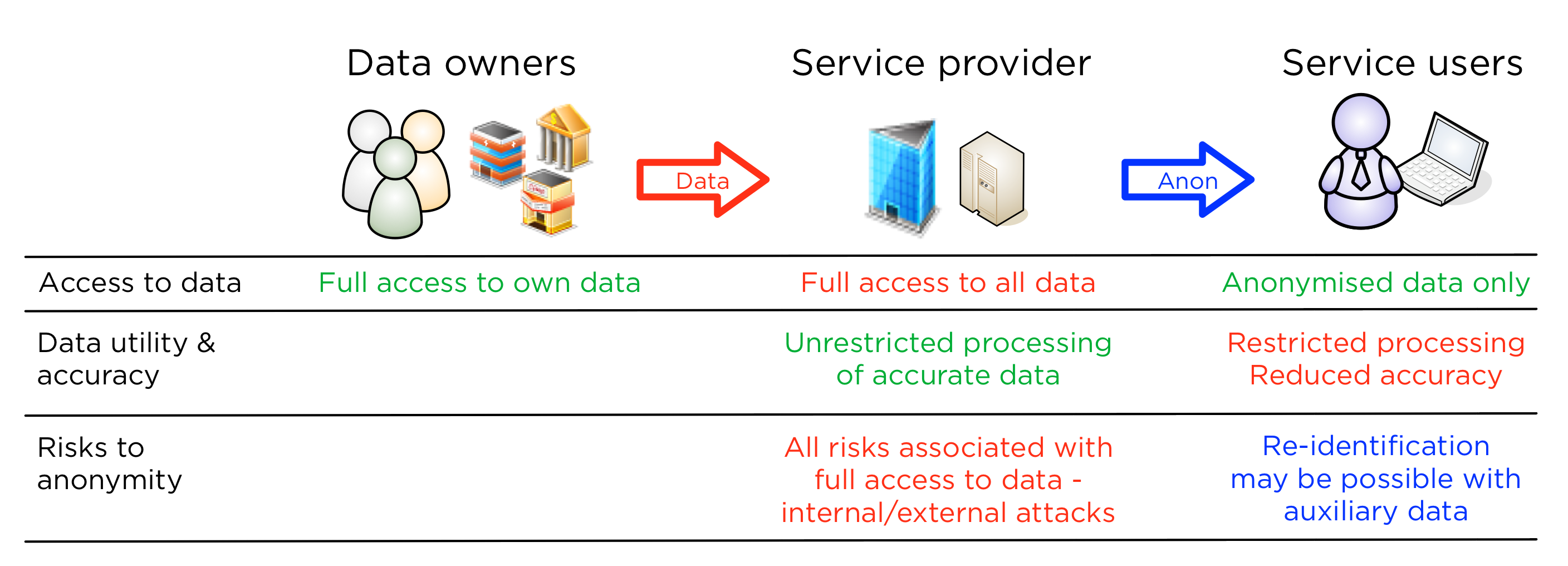

Let us illustrate the usage of anonymisation with a scenario. A data-driven service provider is collecting data from multiple people or organisations and making the data available to its customers through a querying interface. We compare two cases where anonymisation is used.

Anonymisation at the service provider

Here, we assume that the service provider collects identifiable data from multiple sources and processes it to offer insights or statistics to customers. This setup is relatively straightforward to implement, as the service provider can outsource the anonymisation process to a third-party company.

This scheme has the following properties:

- Data owners have to trust the service provider without getting strong privacy controls.

- The service provider has full access to data and must protect it from internal and external attacks and achieve full compliance.

- The customer can run queries against the anonymising system and will have to agree not to try to re-identify records.
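As one possible illustration of such a querying interface, the sketch below answers a counting query with differential privacy by adding Laplace noise to the true count. It assumes that the service provider holds the raw rows, and the function names, the sample data and the choice of `epsilon` are hypothetical.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponential samples."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(rows, predicate, epsilon, rng):
    """Answer a counting query with Laplace noise; a count has sensitivity 1."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for row in rows if predicate(row))
    return true_count + laplace_noise(1 / epsilon, rng)

rng = random.Random(42)  # fixed seed only to make the illustration repeatable
rows = [{"age": a} for a in (25, 37, 41, 58, 63)]  # hypothetical raw data at the provider

noisy = private_count(rows, lambda r: r["age"] >= 40, epsilon=0.5, rng=rng)
print(round(noisy, 2))
```

A smaller `epsilon` means more noise and stronger protection, at the cost of less accurate answers for the customer.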

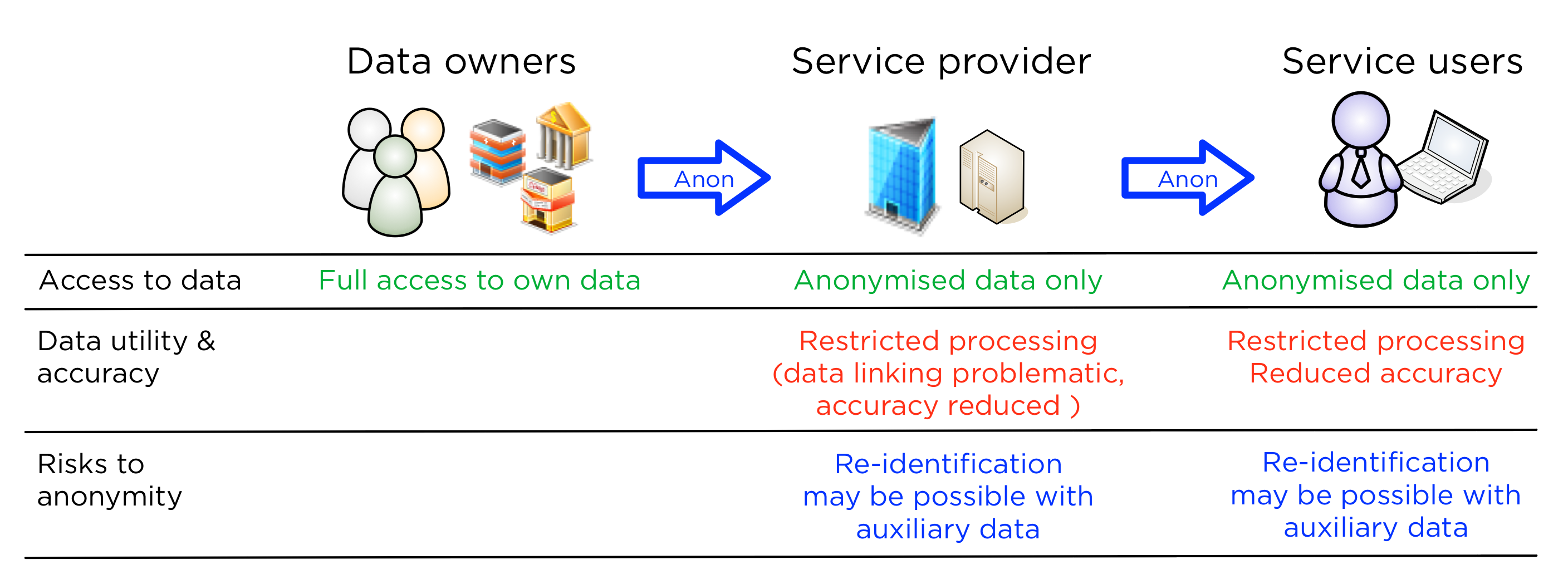

Anonymisation at the data owner

While anonymising at the data owner achieves better privacy, it is also more complex to build, as anonymisation has to be performed at the data source. Outsourcing anonymisation at the data source to a consultant or an expert company will lower the security level. After all, wasn't the goal to get better privacy by keeping the private data at the source?

This scheme has the following properties:

- Data owners get better privacy controls, but are responsible for performing anonymisation (which may not be an automated task).

- The service provider no longer has full access, but anonymisation schemes may also make certain processing (e.g., linking multiple databases) impossible, especially if identity records are suppressed or randomised by the data owner.

- The customer can run queries against the anonymising system as before, but may receive data with even lower accuracy due to restrictions on the analysis process.

Anonymisation from the legal perspective

From the legal perspective, anonymisation is still a concept in flux. This becomes especially evident from discussions between lawyers and software engineers - while the term “anonymous” in the GDPR seems to be an over-arching, technology-neutral term for lawyers, technologists tend to treat it as a subset of the broader concept of “de-identification” [1]. At the same time, the Article 29 Working Party (29WP) considers anonymisation to be the result of processing personal data in order to irreversibly prevent identification, as opposed to other means of de-identification, such as pseudonymisation, in which case re-identification is still possible [2].

The 29WP approach has already received some criticism from researchers and privacy consultants [3]. In addition, the 29WP in its opinion seems to ignore alternative anonymisation techniques altogether, although this is not in line with the technology neutrality principle provided for in GDPR Recital 15.

A close reading of GDPR Recital 26 suggests that it opens the door to a more liberal conceptualisation of anonymised information. Namely, GDPR Recital 26 defines anonymous information as “information which does not relate to an identified or identifiable natural person or to personal data rendered anonymous in such a manner that the data subject is not or no longer identifiable”. The second part of the definition, “personal data rendered anonymous in such a manner that the data subject is not or no longer identifiable”, presumes that the underlying personal data is still related to identified or identifiable natural persons but is processed in a way that the data subject cannot be identified.

Note the wording “is not or no longer identifiable” in GDPR Recital 26. It is different from the preceding Data Protection Directive Recital 26 wording in the same context: “data rendered anonymous in such a way that the data subject is no longer identifiable”, where the words “is not” are missing. This indicates that the authors of the GDPR did not wish to limit the concept of anonymisation to irreversible de-identification only (“no longer identifiable”) - they accept other means of anonymisation where the data subject can remain anonymous to some parties in a specific situation (relative anonymity) but not to everyone in all conceivable cases (absolute anonymity).

This means that the GDPR has broadened the concept of anonymisation. One might even call it the start of the age of Anonymisation 2.0. Not only does it involve anonymised databases and anonymised query results but also other means of de-identification, which we will discuss in the second part of this blog post.

The risk of re-identification

Practitioners and regulators are realising there is no permanent and irreversible anonymisation technique. All means of anonymisation are subject to the risk of re-identification to some extent. For this reason, it can be misleading to consider anonymisation only as permanent de-identification.

For anonymised databases, the risk of re-identification may be easily realised if there are auxiliary information sources available, against which an attacker may compare the information in the database and thus generate correlations to identify individuals covered in the database. For an anonymised query result, the underlying database against which queries are made is fully accessible to the query service provider, even if it remains hidden from the enquirer.
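The comparison against auxiliary information can be sketched as a simple linkage: an attacker joins the anonymised records with a second, identified data set on the attributes the two have in common and keeps the matches that are unique on both sides. The data sets, the names and the column names below are invented for illustration.

```python
from collections import defaultdict

def link(anonymised, auxiliary, keys):
    """Map names from the auxiliary data to anonymised records when the match is unique on both sides."""
    records_by_key = defaultdict(list)
    for record in anonymised:
        records_by_key[tuple(record[k] for k in keys)].append(record)

    people_by_key = defaultdict(list)
    for person in auxiliary:
        people_by_key[tuple(person[k] for k in keys)].append(person)

    return {
        people[0]["name"]: records_by_key[key][0]
        for key, people in people_by_key.items()
        if len(people) == 1 and len(records_by_key.get(key, [])) == 1
    }

# Hypothetical anonymised database (names removed, attributes generalised).
anonymised = [
    {"age_band": "30-39", "postcode_prefix": "51", "diagnosis": "A"},
    {"age_band": "30-39", "postcode_prefix": "51", "diagnosis": "B"},
    {"age_band": "40-49", "postcode_prefix": "10", "diagnosis": "C"},
]
# Hypothetical auxiliary source, e.g. a public register.
auxiliary = [
    {"name": "Alice", "age_band": "40-49", "postcode_prefix": "10"},
    {"name": "Bob", "age_band": "30-39", "postcode_prefix": "51"},
]

reidentified = link(anonymised, auxiliary, ["age_band", "postcode_prefix"])
print(reidentified)
```

In this toy data, only the person whose attribute combination is unique gets linked to a record; the two records sharing the same age band and postcode prefix protect each other, which is the intuition behind k-anonymity.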

Having said that, while re-identification stories are famous in the research literature, the skills needed to re-identify individuals are not common. Re-identification may also be defeated by the data model. To conclude, successfully deploying anonymisation requires a Privacy Impact Analysis with a focus on auxiliary information and the privacy impact of leakages at the service provider.

In the second part of this blog post, we will learn how Sharemind fits into the picture and improves it.

[1] S. Schiffner, B. Berendt, et al. Towards a Roadmap for Privacy Technologies and the General Data Protection Regulation: A transatlantic initiative. To appear in: Proceedings of the Annual Privacy Forum 2018, 13-14 June 2018, Barcelona. Springer, 2018. Available at: https://www.esat.kuleuven.be/cosic/publications/article-2909.pdf

[2] Article 29 Working Party. Opinion 05/2014 on Anonymisation Techniques. 10 April 2014. Available at: http://ec.europa.eu/justice/article-29/documentation/opinion-recommendation/files/2014/wp216_en.pdf

[3] A. Kassem, G. Acs, C. Castelluccia. Differential Inference Testing: A Practical Approach to Evaluate Anonymized Data. Research Report, INRIA, 2018. Available at: https://hal.inria.fr/hal-01681014/